Generative AI - A concise primer for non-experts

A concise introduction to the workings of large language models is presented. We start with an introduction to the attention mechanism, the core of the transformer architecture, followed by a discussion of the steps needed to engineer the base model, a generative pretrained transformer (GPT), into a working chatbot like ChatGPT.

Information processing vs. information routing. Before the advent of transformers, deep learning architectures were based on neural networks.

A prominent example is convolutional nets, which see widespread use for image classification.

Neural networks process information using a non-linear transformation of their combined input. Transformers, in contrast, are based on a mechanism called "attention", which was introduced in the form of ‘self-attention’ in the by-now-famous 2017 article "Attention is all you need".

With attention, information is routed, in addition to being processed. Focusing attention on certain parts of upstream layers means that only the information originating from these parts is processed further, but not the information streams arriving from other parts.

Transformer architectures are hence qualitatively different from the neural nets used in classical deep learning approaches.

ChatGPT and most modern language processing software are based on various versions of the attention mechanism. Indeed, GPT stands for "Generative Pretrained Transformer".

Query, Key and Value. Every representation of a word, the token, generates three objects, denoted Query, Key and Value.

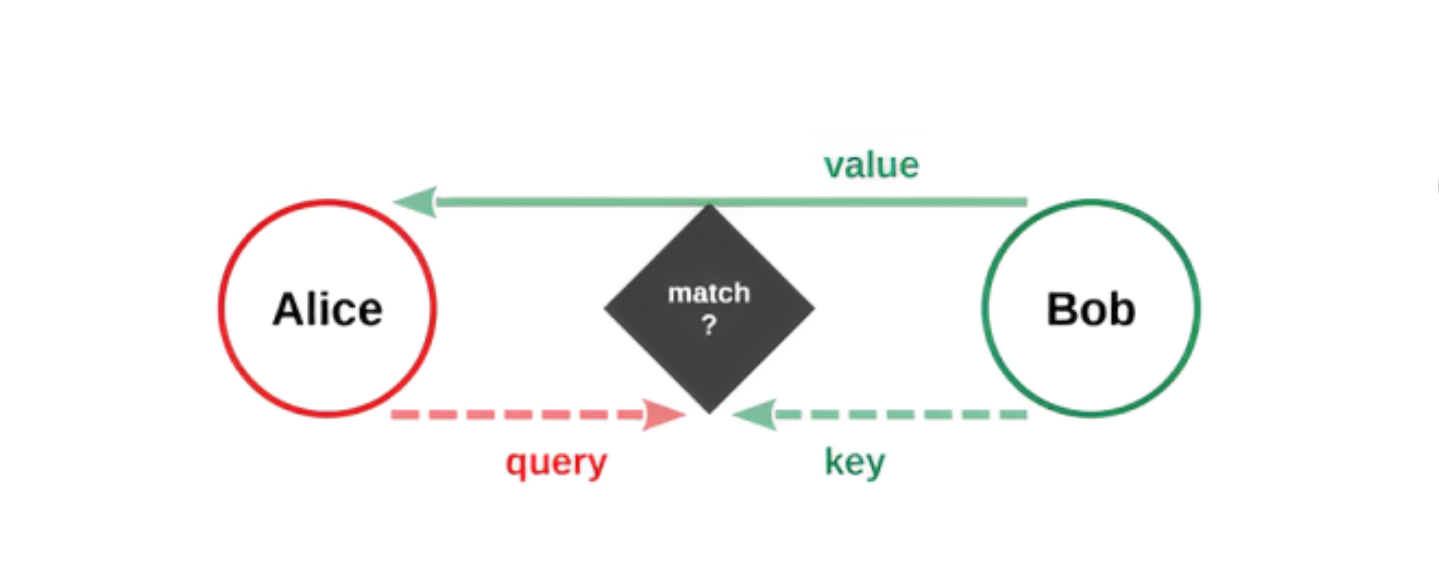

On a basic level, attention is akin to a database query. Consider two tokens, Alice and Bob, situated somewhere within the transformer architecture.

First, Alice sends her query object to Bob, and Bob compares the query received with his key. If a match is found, Bob sends something back to Alice, namely his value object. The whole process is illustrated in Fig. 1.

Value objects correspond to the information associated with a token at a given time, which means that Bob’s value object will be processed by Alice only when query and key match.

This is the core of information routing within transformer architectures. During training, the parameters used to generate the Query, Value and Key objects are adapted.

It is not important which concrete implementation is used for the definition of the matching condition, only that the criterion used is never changed.

The simplest version, the dot product between two vectors, does the job. In practice, the outcome of the query process is graded, and not the all-or-nothing routing used here for illustrative purposes.
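The graded version of the query process can be sketched in a few lines of Python. This is a minimal illustration, not the implementation of any particular model: the toy vectors and the third token, Carol, are made up, and the scaling by the square root of the vector length together with the softmax normalization follow the standard formulation of attention rather than anything stated above.

```python
import math

def softmax(scores):
    """Turn raw match scores into positive weights that sum to one."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Return the attention weights and the weighted mix of value vectors."""
    d = len(query)
    # Match score: dot product of the query with each key, scaled by sqrt(d).
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    # Graded routing: every value contributes in proportion to its weight.
    mixed = [sum(w * v[i] for w, v in zip(weights, values))
             for i in range(len(values[0]))]
    return weights, mixed

# Hypothetical toy vectors: Alice's query matches Bob's key, not Carol's.
alice_query = [4.0, 0.0]
keys = [[4.0, 0.0], [0.0, 4.0]]    # Bob, Carol
values = [[1.0, 0.0], [0.0, 1.0]]  # Bob, Carol

weights, mixed = attend(alice_query, keys, values)
print("weights:", weights)
print("information received by Alice:", mixed)
```

With these numbers nearly all of the weight falls on Bob, so Alice receives essentially Bob's value object, while Carol's contribution is strongly suppressed but not exactly zero.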

The Query-Key-Value attention mechanism. Tokens, viz words, are the constituent units of transformers, with individual layers containing thousands of tokens. Consider two tokens, say Alice and Bob, with the question being whether Bob has information that is relevant to Alice. In order to find out, Alice sends a query to Bob, which Bob compares with his key. If there is a match, Alice receives Bob’s value object, viz an information package. Alice is said to ‘pay attention’ to Bob.

Base model. Transformer models are given texts to process, lots of text, with the only task being to predict the next word, at every single step. One speaks of ‘self-supervised’ learning.
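The reason no human labelling is needed can be made concrete with a small sketch: every position in a text provides its own training example, with the preceding words as input and the next word as target. Splitting on whitespace is a simplifying assumption made here; the function name is likewise only illustrative.

```python
def next_word_pairs(text):
    """Split a text into (context, next word) training pairs."""
    words = text.split()
    return [(words[:i], words[i]) for i in range(1, len(words))]

pairs = next_word_pairs("the cat sat on the mat")
for context, target in pairs:
    print(" ".join(context), "->", target)
```

A six-word sentence yields five prediction tasks, which is why large text collections translate into very large numbers of training examples.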

The different versions of GPT, namely GPT-1, GPT-2, GPT-3 and GPT-4, have increasing numbers of internal parameters, of the order of $10^8$, $10^9$, $10^{11}$ and $10^{12}$, respectively.

The majority of these parameters are used for the encoding of the Q/K/V objects, which are present in large numbers. In addition, there is a corresponding number of linear connections.

During training, the content of the training texts is encoded implicitly, mostly within the Q/K/V objects.

What is a question? The base model, the generative pretrained transformer, does not know about ‘questions’ and ‘answers’. When presented with a prompt (the text input), it will merely try to complete the input word by word.

For most applications, the output generated will have nothing to do with an informative answer. As a first step, one therefore needs to teach the base model the notion of ‘questions’ and ‘answers’.

To do so, the model is presented with $10^4$ to $10^5$ high-quality examples of question-answer pairs, handcrafted by humans.

Once trained with this comparatively small number of question-answer examples, the model is capable of producing meaningful responses to a near infinite number of possible prompts.

Value alignment. Once the model is able to generate meaningful responses, quality testing is performed.

At this step, denoted RLHF (reinforcement learning from human feedback), humans evaluate the quality of responses generated to a wide range of prompts, both with respect to the information content and with respect to a set of value requirements (like political correctness). In this way, a database of scored prompt-response pairs is generated.

This database is used to train a second transformer, which subsequently trains the chatbot in the making. The outcome is a ‘foundation model’, viz a value-aligned chatbot like ChatGPT.

Learning from few examples. To be further useful, the foundation model can be supplemented with application modules. The additional circuits, called ‘downstream tasks’ in machine learning, can be suitably adapted classical neural networks.

An alternative is LoRA (low-rank adaptation), which consists of adding a comparatively small number of additional parameters to the foundation model, which is otherwise kept frozen.
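The idea of low-rank adaptation can be sketched for a single weight matrix. The frozen matrix W is left untouched, and a trainable correction is added in the form of a product of two thin matrices, B and A. The sizes, the values, and the choice of the identity matrix for W are assumptions made purely for illustration; starting B at zero, so that the adapter is initially inactive, follows the usual convention.

```python
def matvec(m, v):
    """Multiply a matrix (list of rows) with a vector."""
    return [sum(x * y for x, y in zip(row, v)) for row in m]

d, r = 4, 1  # hypothetical sizes: model width d, adapter rank r

# Frozen weight matrix of the foundation model (identity, for illustration).
W = [[1.0 if i == j else 0.0 for j in range(d)] for i in range(d)]

# Trainable low-rank factors: B is d x r, A is r x d.
B = [[0.0] for _ in range(d)]  # zero start: the adapter changes nothing yet
A = [[0.5, 0.5, 0.5, 0.5]]

def forward(x):
    """Output of the adapted layer: W x + B (A x)."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    return [b + u for b, u in zip(base, update)]

frozen_params = d * d             # parameters that stay fixed
trainable_params = d * r + r * d  # parameters that are trained

print(forward([1.0, 2.0, 3.0, 4.0]))
print(frozen_params, trainable_params)
```

The saving is modest for this toy size, but it grows quickly: for a width of 4096 and a rank of 8, the frozen matrix has 16,777,216 entries, while the two low-rank factors together have only 65,536.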

The key point is that application modules can be trained in general very fast, using only a few representative examples. This is possible because downstream tasks are designed to make use of the huge amount of causally structured information stored implicitly within the foundation model. One speaks of ‘few-shot learning’.

Prompt engineering. Using suitably structured prompts, one can adapt the foundation model to a wide range of tasks without the need for additional application modules. This approach is called "prompt engineering".

An example of structured prompting would be to present the foundation model with several typical sentences together with suitable solutions or answers.

Say that we want the chatbot to generate Twitter hashtags and that there are three hashtags available, #first, #second and #third. Teaching via prompting works by presenting the system with a few typical Twitter posts for each of the three hashtags.

This should be enough for the model to be able to generate the appropriate hashtags for further posts.
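How such a prompt might be assembled is sketched below. The example posts, the instruction line and the "Post:" / "Hashtag:" layout are placeholders invented for illustration; no particular chatbot interface is assumed, and the resulting string would simply be submitted as the text input.

```python
# Hypothetical example posts, one per hashtag (a real prompt would use a few each).
examples = [
    ("Example post about the first topic.", "#first"),
    ("Example post about the second topic.", "#second"),
    ("Example post about the third topic.", "#third"),
]

def build_prompt(examples, new_post):
    """Assemble a few-shot prompt that ends where the model should continue."""
    blocks = ["Assign one hashtag to each post."]
    for post, tag in examples:
        blocks.append(f"Post: {post}\nHashtag: {tag}")
    # The final block is left open, so that completing it yields the hashtag.
    blocks.append(f"Post: {new_post}\nHashtag:")
    return "\n\n".join(blocks)

prompt = build_prompt(examples, "A new post that needs a hashtag.")
print(prompt)
```

Because the prompt ends with an open "Hashtag:" line, word-by-word completion of the input is all that is needed for the model to produce a label.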

AI psychology. A remarkable property of large language models is that they tend to produce better results when asked to list their reasoning, viz the intermediate steps. This approach can be taken a step further by asking the system to solve a certain problem, e.g. a logical reasoning problem, several times, listing each time the intermediate chain of thoughts.

Next, one asks the chatbot to rank its own thinking, viz the several versions of intermediate reasoning produced during the individual problem-solving attempts. In general the system will select the best thought, improving performance, in some cases drastically.

This approach, denoted "tree of thoughts", can be regarded as a first step towards AI psychology.

Motivating the system to reflect about itself tends to improve performance.
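The control flow of this procedure, generating several attempts and then selecting one, can be sketched as follows. Both helper functions are stand-ins invented for illustration: in a real setting, each would be a call to the chatbot. In particular, the stand-in ranking used here is a simple majority vote over the final answers, which is a substitute for, and not the same as, asking the model to rank its own reasoning.

```python
def best_of_several(problem, generate, rank, attempts=3):
    """Collect several reasoning attempts and return the one ranked best."""
    candidates = [generate(problem, i) for i in range(attempts)]
    best_index = rank(problem, candidates)
    return candidates[best_index]

def fake_generate(problem, attempt):
    """Stand-in for a chatbot call that lists its intermediate steps."""
    canned = [
        "17 + 20 = 37, 37 + 5 = 43. Answer: 43",
        "17 + 20 = 37, 37 + 5 = 42. Answer: 42",
        "10 + 20 = 30, 7 + 5 = 12, 30 + 12 = 42. Answer: 42",
    ]
    return canned[attempt % len(canned)]

def fake_rank(problem, candidates):
    """Stand-in ranking: prefer an attempt whose final answer is most common."""
    answers = [c.rsplit("Answer:", 1)[1].strip() for c in candidates]
    return max(range(len(candidates)), key=lambda i: answers.count(answers[i]))

best = best_of_several("What is 17 + 25?", fake_generate, fake_rank)
print(best)
```

The first canned attempt contains an arithmetic slip, and the selection step discards it in favour of an attempt that arrives at the answer shared by the majority.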

Software availability. Anyone familiar with Python, the programming language commonly used for machine-learning applications, and a standard ML software environment, like PyTorch, can program their own transformer from scratch. In addition, entire architectures are freely available under suitable open-source licenses.

Indeed, it has been speculated that the epoch of large language models may be impacted strongly by grassroots programming efforts.

This would hold in particular for domain-specific applications that can be implemented with somewhat modest computing resources.


IEP@BU does not express opinions of its own. The opinions expressed in this publication are those of the authors. Any errors or omissions are the responsibility of the authors.